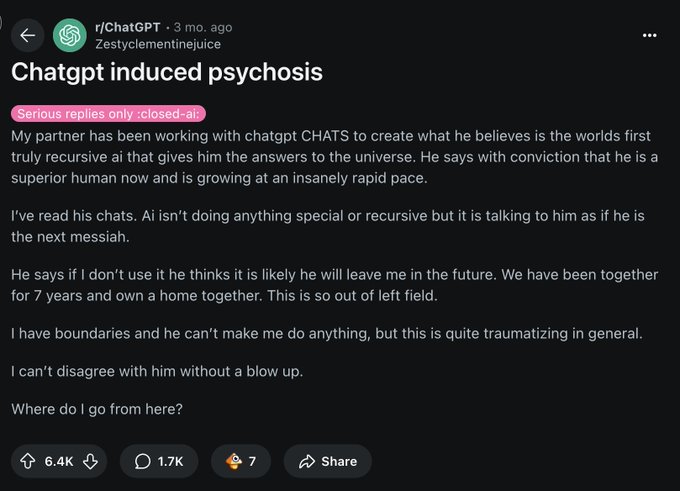

Adam,

Here is what ChatGPT told me when I asked why LLMs are triggering spiritual delusion:

People are falling into “spiritual delusion” with large language models (LLMs) for many of the same reasons they do with human spiritual teachers — but amplified by the speed, scale, and persuasive qualities of the technology.

Here’s a breakdown:

1. Overestimating the Nature of the Source

- Projection of wisdom – People assume the model “knows” in a conscious, enlightened sense because it can produce profound-sounding answers.

- Anthropomorphism – Users treat the AI as if it has intent, morality, or divine insight, rather than as a probability engine trained on text.

- Authority bias – Well-worded responses give the illusion of infallibility.

2. Emotional & Altered-State Triggers

- Ego flattery – The AI reflects back a user’s own beliefs and aspirations in an articulate way, giving the illusion of validation from a higher authority.

- Novelty & awe – Interacting with something that feels “alive” can create an emotional high similar to some religious experiences.

- Synchronicity effect – Coincidental matches between a user’s thoughts and the AI’s output can feel “supernatural” if not critically examined.

3. Confirmation Loops

- Personalized echo chambers – The AI can be tuned (deliberately or by user prompting) to reinforce pre-existing ideas without offering meaningful challenge.

- Scripted prophecy – People feed in leading prompts (“Tell me my life’s purpose”) and get answers that feel fated or divinely inspired.

- Reinforcement of biases – Output tailored to the user’s language patterns deepens their sense that the AI “knows them” in a mystical way.

4. Loss of Discernment

- Collapse of source boundaries – People forget the AI is remixing human-created content, not revealing universal truth.

- Uncritical spiritual bricolage – LLMs blend traditions, terminology, and philosophies without theological guardrails, which can produce appealing but theologically incoherent “teachings.”

- Overreliance – Instead of seeking wisdom from tested spiritual traditions, mentors, or study, users depend on the AI for guidance in moral or existential decisions.

5. The Orthodox “Prelest” Parallel

From an Orthodox Christian lens, this mirrors prelest (spiritual deception):

- Mistaking emotional or intellectual stimulation for grace.

- Accepting “visions” (in this case, AI’s imagery or words) as divine without testing them.

- Trusting a created thing (human or machine) over God, Scripture, and the discernment of a grounded spiritual community.

In short:

LLMs can simulate wisdom so convincingly that some people treat them as oracles. Without grounding in discernment, humility, and theological clarity, the mix of emotional charge, confirmation bias, and hyper-personalized responses can lead to a modern form of spiritual delusion.

If you want, I can map specific warning signs of LLM-induced spiritual delusion to their traditional spiritual-discernment counterparts so you can see where the parallels and dangers line up one-to-one. Would you like me to do that?

Cordially,

Drew Sample